Technical Description of the Project

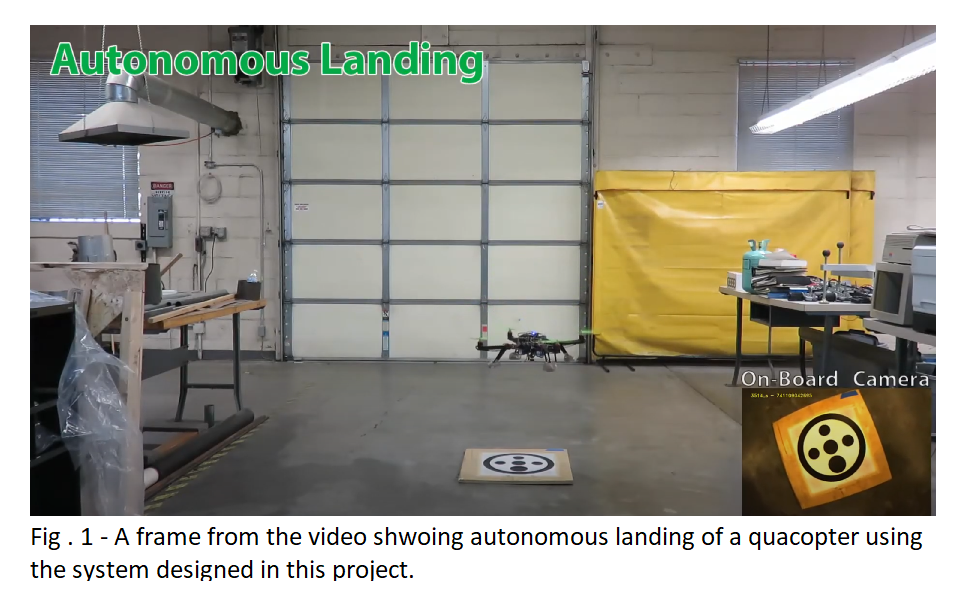

Autonomous takeoff and landing of UAVs requires a precise estimate of the pose (x, y, z, roll, pitch, and yaw) relative to a landing marker or landing site. Satellite-based navigation systems typically cannot provide this estimate at the precision and frame rate required by flight control systems (typically 50 Hz or higher). During unmanned flight, and especially during the landing procedure, the aircraft must be able to make split-second decisions based on the current state of the system. Visual sensors can be used successfully during landing because they provide the pose with an accuracy that is typically better than that of GPS and sufficient to complete the autonomous landing task. However, vision data carry a considerable amount of information that must be processed in real time to be effective. Because precise localization and control, especially during takeoff and landing, need high-frequency pose estimation, in this project we explored parallel computing on CPU/GPU for pose estimation algorithms that use vision data as an aiding sensor.

We started by analyzing the state of the art in autonomous landing. We found that only a few works exploit the GPU for on-board image processing, and none of them provides a full pose estimation or exploits high-definition images. The other solutions are based on CPUs or rely on a Ground Control Station for image processing. In addition, none of them achieves a high on-board position estimation rate on high-definition images.

To overcome these limitations of the state of the art, we designed a pose estimation system based on a fiducial marker and an embedded CPU/GPU board. The software follows a parallel computing approach that exploits the high parallelism of GPUs. We tested the accuracy and reliability of our system through several laboratory and field experiments. We then integrated our system with a commercially available autopilot to perform autonomous landing in GPS-denied environments. We found that, thanks to the high parallelism of the GPU, the developed algorithm detects the landing pad and provides a pose estimate with a minimum frame rate greater than 30 fps, regardless of the complexity of the image being processed. Estimating the pose at more than 30 fps allows smooth and reactive control of the UAV, which makes a precise landing possible. In particular, the results show that our algorithm provides pose estimation with a minimum frame rate of 30 fps on images of 640x480 pixels, which allows the landing pad to be detected even from several meters away. Furthermore, the embedded GPU/CPU board allows the UAV to process the video on board in real time. This avoids the need for a powerful ground control station for image processing, which would inevitably increase the delay between the acquisition of an image and the use of the data extracted from it.
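
The six pose components named above can be made concrete with a small sketch. It assumes that marker detection yields a 4x4 homogeneous transform between the marker and the camera, and that the angles follow the ZYX (yaw, pitch, roll) Euler convention; neither assumption is stated in the project description, and the function names are hypothetical. It is plain Python for illustration only, not the GPU implementation described here.

```python
import math


def transform_from_pose(x, y, z, roll, pitch, yaw):
    """Build a 4x4 homogeneous transform with R = Rz(yaw) * Ry(pitch) * Rx(roll).

    Angles are in radians. The ZYX convention is an assumption of this sketch.
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr, x],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr, y],
        [-sp, cp * sr, cp * cr, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def pose_from_transform(T):
    """Recover (x, y, z, roll, pitch, yaw) from a 4x4 homogeneous transform.

    Valid away from gimbal lock (pitch close to +/- 90 degrees).
    """
    x, y, z = T[0][3], T[1][3], T[2][3]
    # R[2][0] = -sin(pitch); clamp to guard against rounding outside [-1, 1].
    pitch = -math.asin(max(-1.0, min(1.0, T[2][0])))
    # R[2][1] = cos(pitch) * sin(roll), R[2][2] = cos(pitch) * cos(roll).
    roll = math.atan2(T[2][1], T[2][2])
    # R[1][0] = sin(yaw) * cos(pitch), R[0][0] = cos(yaw) * cos(pitch).
    yaw = math.atan2(T[1][0], T[0][0])
    return (x, y, z, roll, pitch, yaw)
```

Passing a pose through `transform_from_pose` and back through `pose_from_transform` should return the same six values up to floating-point rounding, which is a convenient sanity check when wiring a pose estimate into a controller.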

This work has been partially funded by the following National Science Foundation (NSF) grant: MRI Collaborative: Development of an Intelligent, Autonomous, Unmanned, Mobile

Significance of the Work

The current state of the art for autonomous landing of UAVs is mainly based on GPS technology. However, the margin of error of GPS estimates is often significant, which can result in improper landings, especially in environments where GPS coverage is poor or absent (for example, urban canyons). GPS can be used to get the UAV close to the target, and the proposed pose estimation system can then provide a precise landing onto the landing pad. The system also provides altitude and heading measurements, which GPS cannot deliver with high accuracy. The proposed system greatly improves the predictability and precision of landings. It also allows the use of ground platforms for recharging or refueling the UAV in order to extend the UAV’s mission endurance. The system is designed for small-scale rotary-wing UAVs. However, following the same methodology, it can be scaled up and applied to commercial quadrotors and/or helicopters. The current version of the system has a limited size and can be adapted to different electric and gas helicopters. The performance of the system is not affected by the chosen UAV. Several applications could benefit from our work. For example, the system could be integrated into commercial UAVs for delivering packages inside the customer's house.

Papers related to this project

- A. Benini, M. J. Rutherford and K. P. Valavanis, “Real-time, GPU-based pose estimation of a UAV for autonomous takeoff and landing”, 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, 2016, pp. 3463-3470. doi: 10.1109/ICRA.2016.7487525.

- A. Benini, M. J. Rutherford and K. P. Valavanis, “Experimental evaluation of a real-time GPU-based pose estimation system for autonomous landing of rotary wings UAVs”, 2018, Journal of Control Theory and Technology, South China University of Technology and Academy of Mathematics and Systems Science.

Patents related to this project

- A. Benini, M. J. Rutherford and K. P. Valavanis, Image processing for pose estimation - US Patent 10,540,782

- A. Benini, M. J. Rutherford and K. P. Valavanis, Visual landing target - US Patent App. 29/585,482